DockerHub’s impending download rate limit presents an interesting challenge for some.

From hobbyists to open core ecosystems, projects are trying to find ways to insulate their users.

For my projects, I chose to deploy a simple registry mirror.

One nice thing about this project is that the system is largely stateless (and cheap to run).

The docker-registry and docker-auth projects are horizontally scalable.

The only stateful system you really need to manage is a cache (which isn’t mission critical).

While Harbor was appealing, it had a lot more overhead than what I needed.

In this post, I’ll walk you through my deployment.

Prerequisites

- An Amazon S3-like system (e.g., minio)

- A Kubernetes cluster

  - Required (or equivalent): Ingress

  - Optional: Persistent Volumes

  - My Stack: cert-manager, ingress-nginx, and external-dns.

- A domain or subdomain to work on.

  - If running locally, take a look at how to set up a local domain with kind.

Overview

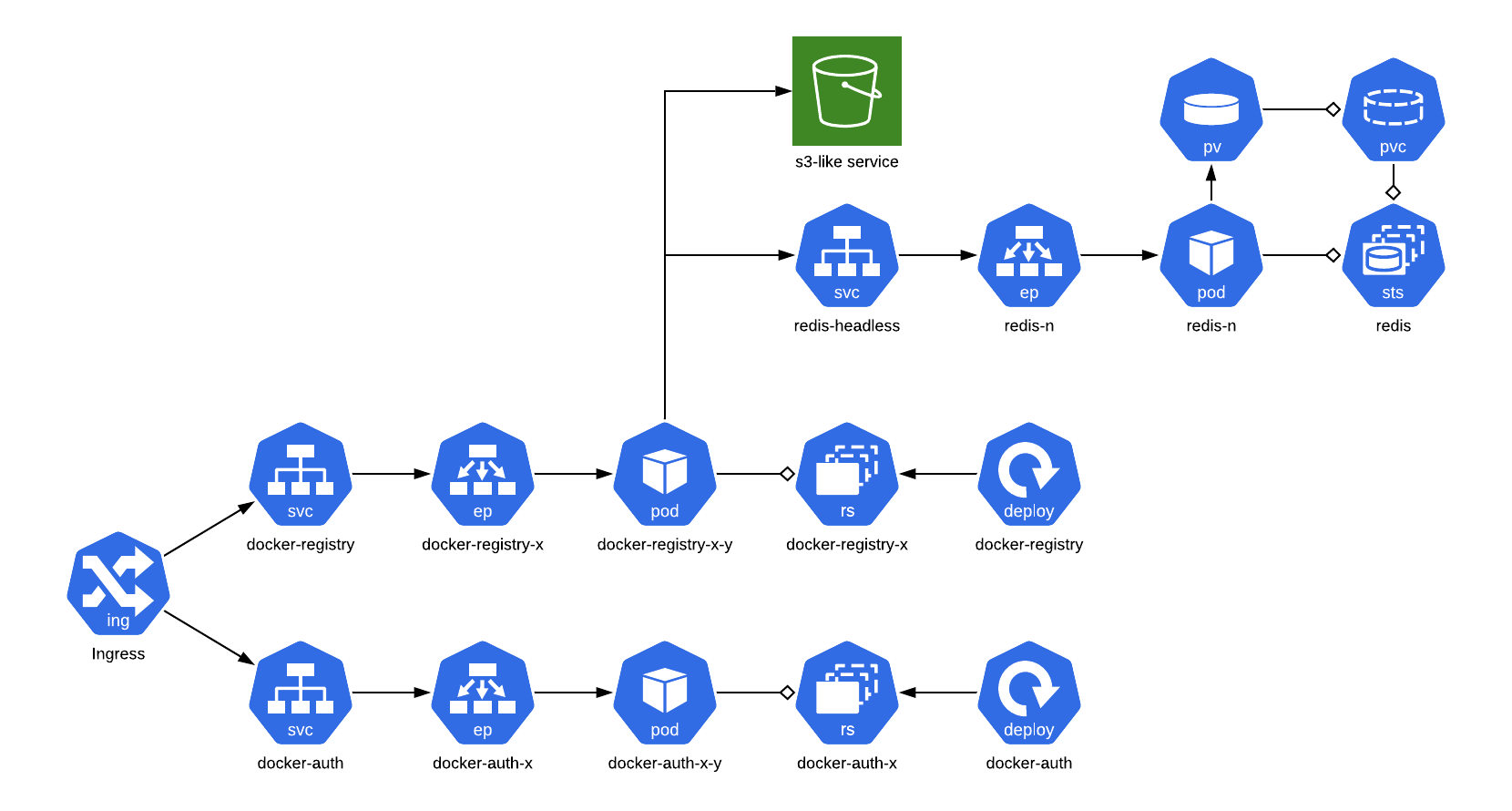

Unlike many of my posts, I tried to externalize resources this time around instead of inlining them. All the configuration used in this project can be found here. Below, you’ll find an overview of the ecosystem.

First, we’ll deploy a simple Redis cluster. Once up and running, we’ll deploy a read-only registry mirror. Finally, we’ll add token authentication to lock down repo access.

Configuration

Before getting into things, let’s prepare our configuration. These values will be used throughout the process and should be unique to your deployment.

    # s3 configuration
    export AWS_ACCESS_KEY_ID="???"
    export AWS_SECRET_ACCESS_KEY="???"
    export S3_REGION=""
    export S3_ENDPOINT=""
    export S3_BUCKET=""

    # obtained from https://hub.docker.com/settings/security
    export DOCKERHUB_USERNAME=""
    export DOCKERHUB_ACCESS_TOKEN=""

    # only allow images from mjpitz to be pulled
    export DOCKERHUB_ALLOWED="mjpitz"

    export REDIS_PASSWORD=$(uuidgen)

In addition to that configuration, we will need to tap a couple Helm repositories.

stable provides a well-supported docker-registry chart.

bitnami provides a couple redis charts sufficient for use with the registry.

    helm repo add stable https://kubernetes-charts.storage.googleapis.com
    helm repo add bitnami https://charts.bitnami.com/bitnami
    helm repo update

Deploy a redis cluster

First things first, docker-registry supports caching blobs read from the S3 backend.

It supports two types of drivers: inmemory and redis.

For our deployment, we’ll be using redis.

Bitnami provides a redis and a redis-cluster chart.

The redis chart offers a primary-replica scheme.

A redis-cluster provides multiple primaries, improving the availability of writes.

For our sake, we’re just going to use the redis chart.

All we need to do is provide a password.

    helm upgrade -i redis bitnami/redis --version 11.2.3 \
      --set password=${REDIS_PASSWORD}

Deploy the registry

Once we have Redis and S3 ready, we should be able to deploy the registry. For this, you will need my 01-docker-registry-values.yaml file from the gist. This file configures the registry to:

- Store data in an S3 bucket

- Cache blobs in redis

- Run in a readonly mode (toggled by setting configData.storage.maintenance.readonly.enabled=false)

- Proxy requests to DockerHub (toggled by setting configData.proxy=)

All we need to do is plug in our values from earlier.
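Because each of those values comes from an environment variable, an unset one would reach Helm as an empty string. A small guard can catch that first. The sketch below uses a hypothetical helper named require_env, which is not part of the original setup, and only checks that each variable is non-empty.

```shell
# Hypothetical helper (an assumption, not from the original post):
# report every named environment variable that is unset or empty.
require_env() {
  missing=""
  for name in "$@"; do
    # Indirect lookup of the variable called $name, POSIX sh compatible.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing configuration:$missing" >&2
    return 1
  fi
}
```

Running `require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY S3_REGION S3_ENDPOINT S3_BUCKET DOCKERHUB_USERNAME DOCKERHUB_ACCESS_TOKEN REDIS_PASSWORD || exit 1` before the upgrade stops early and lists whatever is missing. With the values confirmed, the release can be installed.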

    helm upgrade -i docker-registry stable/docker-registry --version 1.9.4 \
      -f 01-docker-registry-values.yaml \
      --set secrets.s3.accessKey=${AWS_ACCESS_KEY_ID} \
      --set secrets.s3.secretKey=${AWS_SECRET_ACCESS_KEY} \
      --set s3.region=${S3_REGION} \
      --set s3.regionEndpoint=${S3_ENDPOINT} \
      --set s3.bucket=${S3_BUCKET} \
      --set configData.redis.password=${REDIS_PASSWORD} \
      --set configData.proxy.username=${DOCKERHUB_USERNAME} \
      --set configData.proxy.password=${DOCKERHUB_ACCESS_TOKEN}

Just like that, you should have a registry mirror up and running. You can test this by port-forwarding to the pod and attempting to pull any image from DockerHub. While being able to pull data from DockerHub is great, this could be used maliciously. For example, someone could start pulling every image, for every tag on DockerHub. This can result in bloating your image registry with images you didn’t intend to host.
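The port-forward test mentioned above might look roughly like the following. This is a sketch under assumptions: mirror_ref is a hypothetical helper, and the service name and port in the comments are guesses at what the chart creates for a release named docker-registry.

```shell
# Hypothetical helper: build the image reference to pull through the mirror.
# Official DockerHub images live under the "library/" namespace, so a bare
# name such as "alpine:3.12" is expanded to "library/alpine:3.12".
mirror_ref() {
  mirror="$1"   # e.g. localhost:5000 while port-forwarding
  image="$2"    # e.g. alpine:3.12 or mjpitz/example:latest
  case "$image" in
    */*) ;;                          # already namespaced, leave as-is
    *)   image="library/$image" ;;   # official image, add the namespace
  esac
  printf '%s/%s\n' "$mirror" "$image"
}

# Assumed flow (service name and port are assumptions, adjust to your release):
#   kubectl port-forward svc/docker-registry 5000:5000 &
#   curl -i http://localhost:5000/v2/
#   docker pull "$(mirror_ref localhost:5000 alpine:3.12)"
```

The helper only builds the reference string. The commented commands are what actually exercise the mirror.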

Adding authentication

docker_auth was built to fill in the authentication (authn) and authorization (authz) gap for Docker.

It was one of the first systems to support Docker’s token authentication flow.

The flow uses signed JWT tokens to verify identity claims by clients.

In order for it to work, we need to generate a private key and certificate.

Together, these are used to sign the JWT tokens.

Using the commands below, we can generate the key and certificate.

Be sure to change the -subj line to something appropriate for your project.

    openssl req -new -newkey rsa:4096 -days 5000 -nodes -x509 \
      -subj "/C=DE/ST=BW/L=Mannheim/O=ACME/CN=docker-auth" \
      -keyout tls.key \
      -out tls.crt

    kubectl create secret tls auth-credentials --cert=tls.crt --key=tls.key

Once our auth-credentials secret has been configured, we can deploy the docker-auth system.

For this example, we’re going to restrict anonymous pulls to a DockerHub user or group of our choosing (DOCKERHUB_ALLOWED).

This means that users who are unauthenticated will only be allowed to pull images from these specified groups.

For this, you will need 02-docker-auth-values.yaml from the gist.

    git clone https://github.com/cesanta/docker_auth.git

    helm upgrade -i docker-auth ./docker_auth/chart/docker-auth \
      -f 02-docker-auth-values.yaml \
      --set "configmap.data.acl[0].match.name=${DOCKERHUB_ALLOWED}/*"

Once running, you should be able to issue /auth requests against the pod.

The endpoint should return a pair of tokens that represent an unauthenticated user.
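Since these tokens are JWTs, the middle dot-separated segment is base64url-encoded JSON holding the claims. To peek inside one, a helper along these lines can decode it. The name jwt_payload is hypothetical, the sketch assumes a base64 binary that accepts -d (as GNU coreutils does), and it does not verify the signature.

```shell
# Hypothetical helper: print the claims (payload) of a JWT without
# verifying its signature. Assumes `base64 -d` is available.
jwt_payload() {
  # Take the second dot-separated segment and map the base64url
  # alphabet ('-' and '_') back to standard base64 ('+' and '/').
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing '=' padding, so restore it.
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

Passing a token string from the /auth response to jwt_payload prints claims such as the issuer (iss) and the granted access list, which is handy when checking that the issuer matches what the registry expects.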

Deploy an ingress

Now that both docker-registry and docker-auth are running, let’s connect them.

In Kubernetes, an Ingress is a great way to provide application layer (L7) routing to applications.

It supports both host and path based routing.

In this post we’ll configure the paths of an ingress to route to their appropriate backend.

/auth, /google_auth, and /github_auth will all route to the docker-auth project.

/v2 will route to the docker-registry project.

To do this, we will need 03-ingress.yaml from the gist.

Be sure to change the host to your project domain.

    kubectl apply -f 03-ingress.yaml

Once applied, you should be able to start working with the ingress definition.

Requests to /auth should resolve from docker-auth.

Requests to /v2/_catalog should resolve from docker-registry.

Remember, some annotations on the ingress are specific to my tech stack.

You may need to find the equivalent for yours.
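For readers without the gist at hand, the path routing described above could be expressed roughly as follows. This is only a sketch, not the contents of 03-ingress.yaml: the function names, the service names (docker-auth, docker-registry), and the ports (5001, 5000) are assumptions, TLS and controller-specific annotations are left out, and the networking.k8s.io/v1 API requires Kubernetes 1.19 or newer.

```shell
# Hypothetical helper: emit one path entry routing $1 to service $2 on port $3.
ingress_path() {
  cat <<EOF
          - path: $1
            pathType: Prefix
            backend:
              service:
                name: $2
                port:
                  number: $3
EOF
}

# Hypothetical helper: render an Ingress for the given host.
# Service names and ports below are assumptions, not values from the gist.
render_ingress() {
  host="$1"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: docker-registry
spec:
  rules:
    - host: ${host}
      http:
        paths:
$(for p in /auth /google_auth /github_auth; do ingress_path "$p" docker-auth 5001; done)
$(ingress_path /v2 docker-registry 5000)
EOF
}
```

Something like `render_ingress registry.example.com | kubectl apply -f -` would apply it, where registry.example.com stands in for your own domain.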

Connecting docker-registry to docker-auth

Now that the client can speak to docker-auth and docker-registry, we can connect the two.

To do so, the docker-registry needs access to the certificate used by docker-auth.

This is used to validate the JWT token.

We can update 01-docker-registry-values.yaml to volume mount the file in.

Then, we just need to point the auth block of configuration at the proper endpoints.

Below is a diff of the changes needed to add auth.

       encrypt: false
       secure: true

    +extraVolumeMounts:
    +  - name: auth
    +    mountPath: "/etc/docker-auth/ssl/"
    +    readOnly: true
    +
    +extraVolumes:
    +  - name: auth
    +    secret:
    +      secretName: auth-credentials
    +
     # see https://docs.docker.com/registry/configuration/
     configData:
    +  auth:
    +    token:
    +      realm: "https://ocr.sh/auth"
    +      service: "ocr.sh"
    +      issuer: "My Auth" # must match issuer from docker-auth
    +      rootcertbundle: /etc/docker-auth/ssl/tls.crt
    +
       http:
         addr: :5000
         debug:

Alternatively, you can grab an updated copy from 04-docker-registry-values.yaml in the gist.

Remember to set your realm, service, issuer and rootcertbundle appropriately.

    helm upgrade -i docker-registry stable/docker-registry --version 1.9.4 \
      -f 04-docker-registry-values.yaml \
      --set secrets.s3.accessKey=${AWS_ACCESS_KEY_ID} \
      --set secrets.s3.secretKey=${AWS_SECRET_ACCESS_KEY} \
      --set s3.region=${S3_REGION} \
      --set s3.regionEndpoint=${S3_ENDPOINT} \
      --set s3.bucket=${S3_BUCKET} \
      --set configData.redis.password=${REDIS_PASSWORD} \
      --set configData.proxy.username=${DOCKERHUB_USERNAME} \
      --set configData.proxy.password=${DOCKERHUB_ACCESS_TOKEN}

Once your release has been upgraded, the registry should deny any requests for images outside of your configured group.

For example, I wound up with ocr.sh for my project's domain.

I configured DOCKERHUB_ALLOWED to only allow unauthenticated users to pull depscloud/*.

This allows my project's consumers to continue to use my product without being rate limited by DockerHub.

Under the hood, all requests to DockerHub are authenticated.

In addition to that, I have tight control over what repositories I allow to be pulled through my proxy.
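One way to check that control is to request a token for a specific repository, the same way a Docker client would in the token authentication flow. The sketch below builds that request URL. token_url is a hypothetical helper and depscloud/example is a placeholder repository name, not a real image.

```shell
# Hypothetical helper: build the token request a Docker client would send
# when asking for pull access to a single repository.
token_url() {
  realm="$1"    # the auth endpoint, e.g. https://ocr.sh/auth
  service="$2"  # must match the registry's configured service, e.g. ocr.sh
  repo="$3"     # repository name, e.g. depscloud/example (placeholder)
  printf '%s?service=%s&scope=repository:%s:pull\n' "$realm" "$service" "$repo"
}

# Example (not run here):
#   curl -s "$(token_url https://ocr.sh/auth ocr.sh depscloud/example)"
```

Comparing the responses for a repository inside the allowed group and one outside it is a quick way to confirm the ACL behaves as intended.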

Thanks for reading! I hope you learned something from this post.